Generally, when you install a graphics card on your PC, the HDMI port on the motherboard gets disabled.

This is an issue for users who want multiple monitors but do not have enough HDMI ports on the graphics card. In this situation, the motherboard HDMI port becomes important for connecting additional displays.

Fortunately, there is a way to circumvent this issue via BIOS. However, there are a few aspects of your system that you need to check before you can enable HDMI on the motherboard.

This tutorial will look extensively at how to use motherboard HDMI with a graphics card plugged in.

Your CPU MUST Have an Integrated Graphics Card

So before we even BEGIN talking about enabling the motherboard’s HDMI port, you MUST ensure your CPU has an integrated graphics card (aka iGPU).

Your motherboard HDMI port will NOT work if your CPU does not have an integrated graphics card.

This is because consumer motherboards generally DO NOT have an onboard video processing chip. All the video output ports located on the back I/O panel of the motherboard are driven by the CPU’s integrated graphics card.

Unfortunately, some CPU models lack an iGPU, so you need to know which category yours falls into. Let us look at which models you should be mindful of for Intel and AMD CPUs separately:

Intel CPUs and Intel Integrated Graphics Cards (Beware of “F” CPUs)

Fortunately, if you have an Intel CPU, there is a high chance it would feature an integrated graphics card. Most Intel CPUs have an iGPU.

The only significant exceptions are the processors with an “F” in their model suffix (including “KF” models), e.g., Intel Core i7-11700F, Intel Core i5-11400F, etc. These DO NOT feature an iGPU and, therefore, cannot power the HDMI port, or any other video output port, on the motherboard.

In other words, if you have an Intel “F” CPU, you must have a dedicated graphics card for video output for your PC.
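As a rough illustration of the “F” rule above, here is a minimal Python sketch that inspects a CPU name string. The function name `intel_lacks_igpu` and the assumption that the model number is four or five digits followed by suffix letters are illustrative only; the official spec sheet remains the reliable source.

```python
import re

# Assumption: the model number is 4-5 digits followed by optional suffix
# letters, as in "i7-11700F" or "i5 11400F". This is a naming heuristic only.
_MODEL = re.compile(r"\b(\d{4,5})([A-Z]*)\b")


def intel_lacks_igpu(cpu_name):
    """Return True if the model suffix contains "F" (no iGPU)."""
    match = _MODEL.search(cpu_name.upper())
    if match is None:
        raise ValueError(f"no model number found in {cpu_name!r}")
    return "F" in match.group(2)


if __name__ == "__main__":
    for name in ("Intel Core i7-11700F", "Intel Core i7-11700K"):
        print(name, "-> lacks iGPU:", intel_lacks_igpu(name))
```

The check looks only at the letters after the model number, so a “KF” part is also reported as lacking an iGPU.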

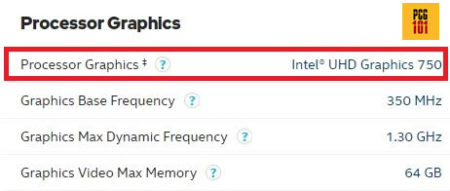

Intel integrated graphics card models include the popular Intel HD 4000 in much older CPUs, and the Intel UHD 630 and Intel UHD 750 in more recent CPU generations.

AMD CPUs and VEGA Series iGPUs (Watch Out for “G” Suffix)

Unlike Intel, where most CPUs DO have an iGPU, the opposite has traditionally been true with AMD.

On Ryzen 5000 series and older desktop CPUs, only the models with the “G” suffix, e.g., AMD Ryzen 5 5600G, feature an integrated graphics card and thus have the capacity to power the motherboard HDMI ports.

If you have any other AMD CPU from those generations, your motherboard HDMI port will NOT work. Newer Ryzen 7000 series desktop CPUs are an exception: most of them include a basic iGPU even without the “G” suffix, so check the spec sheet.

It should be noted that AMD “G” series CPUs offer comparatively powerful Vega series integrated graphics. The Vega iGPU on Ryzen 5000 “G” processors generally outperforms its Intel UHD counterparts.

How to Check If Your CPU Has an Integrated Graphics Card or Not?

There are two ways to check whether your CPU has an iGPU or not:

- Check through the official spec sheet

- Check internally from the Operating System (Device Manager)

1. Checking Through the Official Spec sheet:

This method is relatively straightforward. If you know the make and model of your CPU, you can search it online for its spec sheet.

For instance, the following excerpt from the Intel website shows the Processor Graphics information about the Intel Core i7-11700K CPU. This CPU has the Intel UHD 750 iGPU.

2. Check Internally from the Operating System (Device Manager)

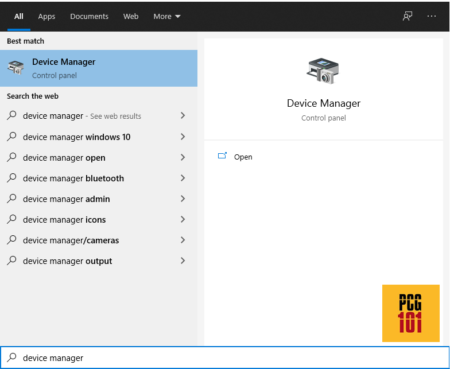

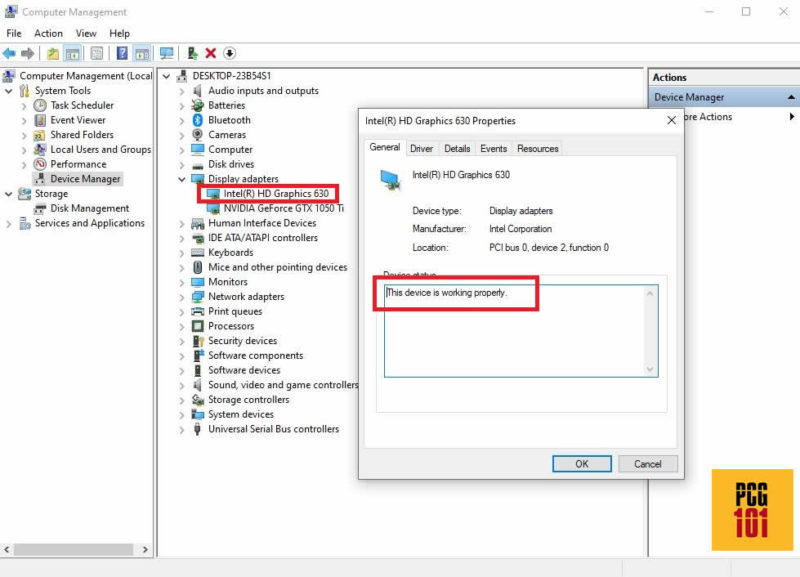

Another relatively easy method is to check directly through the “Device Manager” tool.

For this,

1. Click Search on the Windows Taskbar for “Device Manager.” (Alternatively, you can access Device Manager through Control Panel).

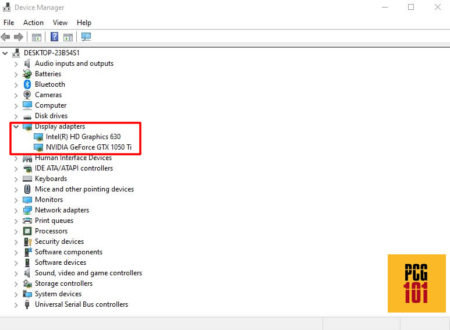

2. Expand “Display Adapters” in the open window and check what GPUs it displays.

In my case, the Device Manager displays the dedicated NVIDIA GTX 1050 Ti and the Intel HD Graphics 630 iGPU.

Hence, this confirms that I DO have an integrated graphics card capable of supporting the motherboard HDMI ports.

If your Display Adapters section DOES NOT show an iGPU, then the motherboard HDMI ports WILL NOT work.
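The same check can be scripted. The sketch below asks Windows for the adapter names through PowerShell's `Get-CimInstance Win32_VideoController` and then filters them with a small list of name fragments. The fragments in `IGPU_HINTS` and the helper names are assumptions made for illustration; they are not exhaustive and may misclassify some adapters.

```python
import subprocess
from typing import List

# Assumption: these lowercase fragments appear in common iGPU names such as
# "Intel(R) HD Graphics 630" or "AMD Radeon(TM) Graphics". Not exhaustive.
IGPU_HINTS = ("hd graphics", "iris", "radeon(tm) graphics", "radeon graphics")


def list_display_adapters() -> List[str]:
    """Return adapter names as Windows reports them (Windows only)."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command",
         "Get-CimInstance Win32_VideoController | "
         "Select-Object -ExpandProperty Name"],
        capture_output=True, text=True, check=True,
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def find_igpus(adapter_names: List[str]) -> List[str]:
    """Keep only the names that look like integrated GPUs."""
    return [name for name in adapter_names
            if any(hint in name.lower() for hint in IGPU_HINTS)]


if __name__ == "__main__":
    adapters = list_display_adapters()
    print("Adapters:", adapters)
    print("Likely iGPUs:", find_igpus(adapters) or "none found")
```

If the script reports no likely iGPU, treat that the same way as an empty Device Manager entry: either the CPU has none, or it is disabled in BIOS.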

How to Use Motherboard HDMI with a Graphics Card

Once you have confirmed that your CPU does have an iGPU, the next step is to enable the motherboard HDMI from BIOS.

Depending upon the make and model of your motherboard, the BIOS interface and menus you have may differ.

Step 1: Access BIOS

When the PC starts, press the correct key to access the BIOS.

The specific key differs between PCs. Often it is the “Delete” or “F2” key; on many boards, “F12” opens the boot menu instead. The startup screen or your motherboard manual will show the right key.

Step 2: Search for Sections Regarding the Display or the Integrated Graphics Card

Once in the BIOS, search for settings related to your iGPU. You want to enable the iGPU, which is automatically disabled when you install a dedicated graphics card.

The setting for this can be labeled differently depending on your BIOS.

For some, it may say “Enable Multi-GPU Support”; for others, it may say “Enable iGPU,” etc.

Step 3: Save and Reset

Save the settings in the BIOS and restart your PC. Your BIOS should have a dedicated KEY for “Save and Restart/Reset.”

Step 4: Plug in Your Monitor to the Motherboard HDMI Port

From here on, things should go smoothly.

Plug your second monitor into the motherboard HDMI port, and it should now output video.

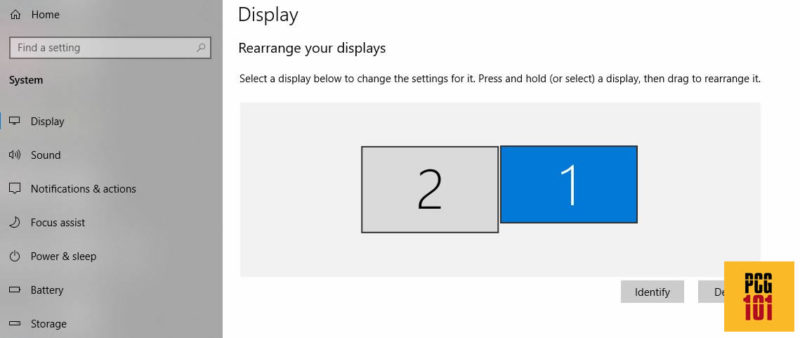

Step 5: Go to “Display Settings” on Windows to Customize the Settings for Multi-Monitor Display

You can customize the displays, their resolution, and their orientation, and select your primary display through the Windows “Display Settings.”

This can be accessed by right-clicking anywhere on the desktop and then selecting “Display Settings” from the menu that shows up.

Points to Note

The Monitor Connected to the Motherboard HDMI Will Have Much Lower Performance

This is a very important point to note, especially if you are a gamer or an enthusiast.

The monitor connected to the motherboard HDMI port is driven by the integrated graphics card, not the dedicated one.

Integrated graphics cards are far weaker than a decent dedicated graphics card. Therefore, your frame rates while gaming on the monitor connected to the motherboard’s HDMI port will SUFFER greatly.

On the other hand, gaming on the monitor connected to your dedicated GPU’s HDMI port will work just fine.

Your CPU has an iGPU But Still Can’t Use the Motherboard HDMI – What Could Be the Cause?

There are two possible causes of this.

1. The BIOS does not Have the Option to Enable the Motherboard HDMI

Some BIOS versions are fundamental and do not provide comprehensive control over your system hardware.

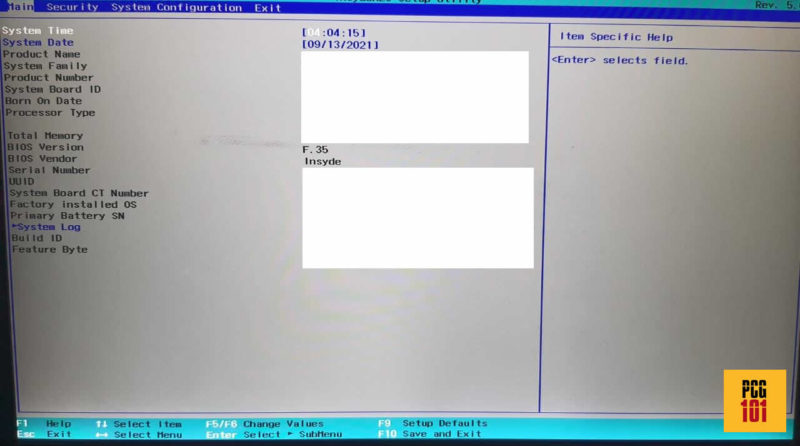

For instance, the following BIOS is a very basic BIOS with minimal hardware control:

You COULD try updating your BIOS to a more robust version. However, that is inadvisable, particularly for the uninitiated.

2. You are Missing the Driver for Your Integrated Graphics Card

If your BIOS can enable the motherboard video ports alongside the dedicated GPU’s HDMI ports but the issue persists, the problem could lie with the drivers.

Check the Device Manager to see if the integrated GPU is listed.

If the integrated GPU is missing or has an error (often a yellow caution sign next to it), that could indicate an issue with the drivers.
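For a scripted version of this check, the sketch below reads the `ConfigManagerErrorCode` property of `Win32_VideoController`, which mirrors the status code Device Manager shows for a device. The helper names are hypothetical, and `KNOWN_CODES` covers only a small subset of the codes Windows can report.

```python
import json
import subprocess

# Small, non-exhaustive subset of Device Manager status codes.
KNOWN_CODES = {
    0: "working properly",
    22: "device is disabled",
    28: "drivers are not installed",
    43: "Windows stopped the device because it reported problems",
}


def describe_status(code):
    """Translate a status code into a short description."""
    return KNOWN_CODES.get(code, f"unrecognized code {code}")


def parse_adapters(json_text):
    """Parse ConvertTo-Json output into (name, code) pairs."""
    data = json.loads(json_text)
    if isinstance(data, dict):  # a single adapter is emitted as one object
        data = [data]
    return [(item["Name"], item["ConfigManagerErrorCode"]) for item in data]


def query_adapters():
    """Run the PowerShell query (Windows only) and return its JSON text."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command",
         "Get-CimInstance Win32_VideoController | "
         "Select-Object Name, ConfigManagerErrorCode | ConvertTo-Json"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


if __name__ == "__main__":
    for name, code in parse_adapters(query_adapters()):
        print(f"{name}: {describe_status(code)}")
```

An iGPU that does not appear in the output at all corresponds to the “missing” case described above, which points to a disabled iGPU or a missing driver rather than a reported error.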

My Device Manager shows everything is in order. It detects the iGPU just fine.

All Ports Get Activated, NOT Just HDMI

It is also worth noting that when you enable the iGPU in BIOS to power the video ports on the motherboard, it enables not just the HDMI port but also any other video ports your motherboard may have.

So if your motherboard also has a VGA, DVI-D, or DisplayPort output, those will also get activated.

Final Words

So as far as the question “how to use motherboard HDMI with graphics card” goes, the answer depends on two questions:

- Do you have a CPU with an iGPU?

- Does your BIOS have the correct settings and control for enabling iGPU?

If the answer to both questions is yes, then the process is as simple as activating the proper setting in your BIOS and then connecting your display to the motherboard’s HDMI port.

FREQUENTLY ASKED QUESTIONS

1. What is the compatibility issue between a motherboard HDMI and a graphics card HDMI?

The main compatibility issue between a motherboard HDMI and a graphics card HDMI is that, by default, they usually can’t be used simultaneously. The graphics card takes over the video output duties, so the motherboard’s HDMI output is disabled unless the CPU has an iGPU and you enable it in the BIOS.

2. Can I use multiple monitors with a combination of motherboard HDMI and graphics card HDMI outputs?

Yes, it’s possible to use multiple monitors with a combination of motherboard HDMI and graphics card HDMI outputs.

However, it depends on the specific motherboard and graphics card, and their respective capabilities.

It’s important to check the documentation for both devices and ensure that they support the desired configuration.

3. What should I do if my motherboard HDMI isn’t working after installing a graphics card?

If your motherboard HDMI isn’t working after installing a graphics card, the first thing to check is whether your CPU has an iGPU, since the motherboard ports cannot work without one.

If it does, you may need to access the BIOS and enable the iGPU or multi-GPU setting, which is typically disabled automatically when a dedicated card is installed.

You can also try installing or updating the integrated graphics drivers.

4. How do I switch between the motherboard HDMI and graphics card HDMI output?

To switch between the motherboard HDMI and graphics card HDMI output, you’ll need to access the BIOS settings and change the primary display output.

Typically, you’ll want to set the primary display output to the graphics card if you have one installed.

However, if you need to use the motherboard HDMI output, you can switch the primary display output back to the motherboard in the BIOS settings.

Hey there!

I have an i5 4690k CPU and an NVidia 1060 GTX 6GB GPU.

I connected the GTX to my monitor and the integrated GPU to my TV using a second HDMI cable. But the thing is if I keep my TV connected I have TERRIBLE performance in games. Even if the TV is off. I have to physically remove the cable from my TV in order to play any game on my monitor without it running at 5 FPS.

So I was wondering:

1. Why is this happening?

and 2. I hoped I could run a movie or game on my monitor and use the integrated GPU to just “copy” what is displayed on my monitor to my TV. Is that possible? Because if not I’m guessing I have to get a cable with display port or DVI (the GTX 1060 has only one HDMI port).

Hi, thanks for dropping by.

So, to “copy”, you can try duplicating the displays. For this go to Display Settings (Right click on main desktop screen and go to Display Settings), in the “Multiple Displays” section select ‘duplicate’ instead of ‘extend’. See if that resolves the issues.

Hi,

I was wondering, If Graphics card and CPU only supports 4 screens, for example AMD 5600G/Intel 12400 and NVidia 1060.

Can they support 6 Displays if I use both motherboard and graphics card HDMI/DP? (Show different content)

Hello, Atif!

You have written a sensational article regarding enabling both the iGPU and dGPU. I have a question, however. For only a SINGLE monitor, which should you plug it into, the iGPU or the dGPU? Numerous tests have shown that enabling both GPUs improves the performance of certain creative applications. To see this performance advantage, should you attach the monitor to the motherboard or the graphics card?

Thank you in advance for your response!

And keep up the good work!

Victor

Hi Victor, thanks for the comment. To leverage the power of the dedicated GPU, you will have to plug the monitor into the dGPU.