Once you spend a hefty amount on a computer part like a GPU, you want the system to actually use it. In some cases the system refuses to do so, which is frustrating. This raises the question: why are games not using the GPU, and how do you fix it?

Many things can cause the system to use integrated graphics instead of the dedicated GPU. Some are driver-related, and some can be fixed in Windows settings.

The main issue most people face is that the system will recognize the graphics card but not use it for games. This behavior may seem odd, as most computers automatically detect and install new parts.

Let us dive straight into the solutions. All you have to do is follow along and ensure you perform each task!

So Why Are Games Not Using the GPU, and How Do You Fix It?

There can be many reasons why your games are not using your dedicated graphics card.

I will cover some of the reasons below:

1. Connect the display to the Dedicated GPU (Not to the motherboard)

One of the most common reasons games do not use the graphics card is that the monitor is connected to the motherboard’s display output instead of the card. A monitor plugged into the motherboard’s video output ports is driven by the CPU’s integrated graphics.

Check whether the monitor’s cable is connected to the motherboard or to the dedicated graphics card. If it is connected to the motherboard, unplug it and connect it to one of the ports on the dedicated graphics card. There is no need to restart the system.

If you already had the wire plugged into the correct port or the issue isn’t gone, move on to the next step.


2. Drivers Can be the Culprit

Dedicated graphics cards are usually not shipped with the system unless you bought a prebuilt PC, so you must install the correct drivers after plugging the card in.

If you are connected to the internet, your operating system may fetch drivers for the graphics card through Windows Update. Still, these are not necessarily the best or latest drivers.

A good first step is to uninstall the current GPU drivers and install the latest ones from the manufacturer’s website (AMD or Nvidia).

Here are the steps to uninstall the drivers:

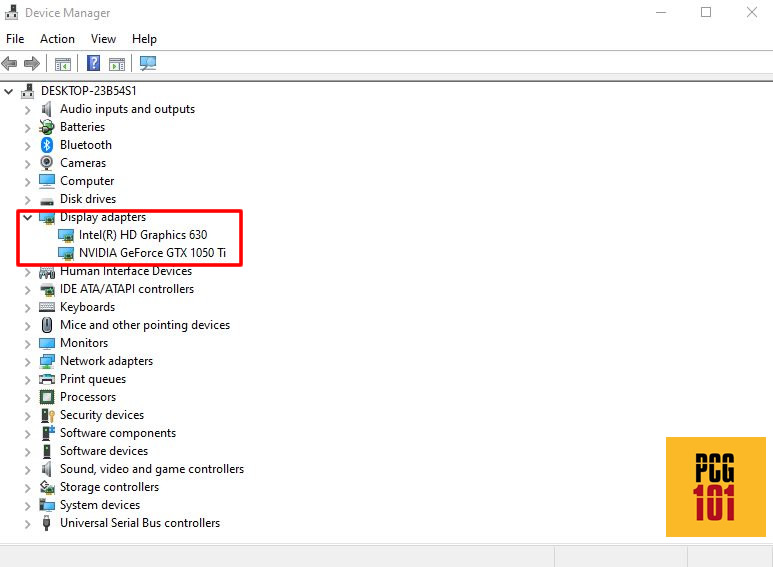

- Open the device manager (search Device Manager in the search bar or start menu)

- Expand Display adapters

- Right-click on your dedicated GPU and click Properties (from the drop-down menu)

- On the Driver tab, click Uninstall Device

- In the popup, check the “Delete the driver software for this device” option

- Click Uninstall

- The drivers are now uninstalled

To install the latest drivers, follow these steps:

- Go to the official AMD or Nvidia website and find the driver auto-detection tool

- Download and run the tool

- Click next through the installation wizard

This process will automatically install the latest drivers for your GPU.
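To confirm which driver version Windows actually ended up with, you can query the display adapters from a script. The following is a minimal sketch, assuming Windows with PowerShell available; it reads the standard `Win32_VideoController` WMI class, and the helper names `parse_adapters` and `list_adapters` are made up for this illustration.

```python
import json
import subprocess

# PowerShell query for display adapters and their driver versions.
# Win32_VideoController is a standard WMI class on Windows.
PS_COMMAND = (
    "Get-CimInstance Win32_VideoController | "
    "Select-Object Name, DriverVersion | ConvertTo-Json"
)


def parse_adapters(raw_json):
    """Turn the PowerShell JSON output into a list of (name, driver_version)."""
    data = json.loads(raw_json)
    # ConvertTo-Json emits a single object, not a list, when only one adapter exists.
    if isinstance(data, dict):
        data = [data]
    return [(item.get("Name"), item.get("DriverVersion")) for item in data]


def list_adapters():
    """Run the query (Windows only) and return the parsed adapters."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", PS_COMMAND],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_adapters(result.stdout)
```

Calling `list_adapters()` on the affected PC and comparing the reported version with the one listed on the manufacturer’s download page shows whether the new driver was really installed. If the dedicated card is missing from the list entirely, the problem is more likely the hardware connection than the driver.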


Go to the next step if you are still facing the issue.

3. Disable Integrated Graphics:

If your system keeps using the iGPU instead of the dedicated card, you can disable the integrated graphics.

Doing this will not harm the system, but the system will no longer see the iGPU.

Note that disabling the integrated graphics will NOT necessarily shift all graphics processing to the dedicated GPU. Some of it may fall back to software-based video rendering.

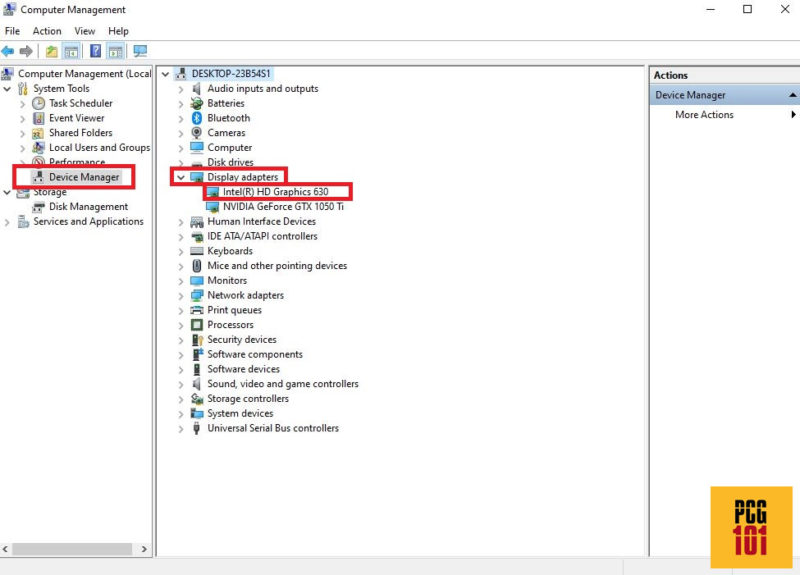

- Go to the device manager (explained earlier)

- Expand the Display adapters option

- Right-click on the integrated graphics

- Click on Disable device

Upon doing so, your system should use the dedicated GPU, as no other display adapter is available.

You should now run the game, and if the issue continues, move on to the next step.

Be careful when disabling your integrated graphics card, and re-enable it from the same menu if the display starts to misbehave.

The following video explains how to disable your integrated graphics card:

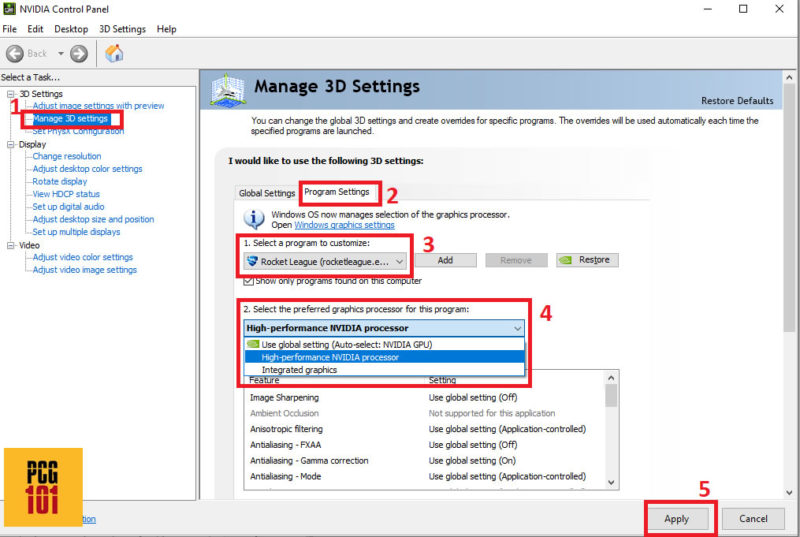

4. Change the Graphics Preference in Windows Settings or the GPU’s Control Panel

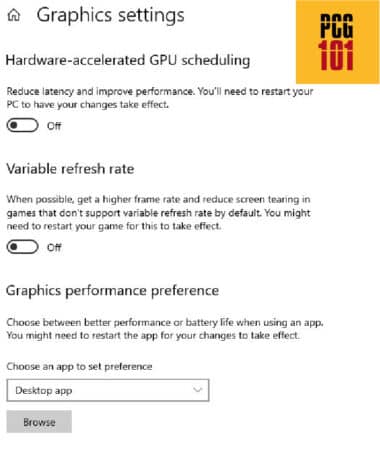

Your system may use integrated graphics by default for display and games. You can change the preferred graphics card for specific apps (games). To do this, you can follow these steps:

- Right-click anywhere on the desktop

- Click Display Settings

- Scroll down, and look for Graphics Settings

- Once in the Graphics Settings, look for the Graphics Performance Preference section

- From the drop-down menu, select Desktop App

- Click Browse

- Find the installation folder of the game

- Select the .exe file

- Click Add

- Once you see the game’s icon, click Options under it

- Select High performance from the popup menu

This will set the preferred graphics processor for this game to be the dedicated GPU.

In addition, whether you have an NVIDIA or an AMD graphics card, you can select the preferred graphics processor from its control panel.
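If you have many games to configure, the per-app preference from the Graphics Settings page can also be written from a script. The sketch below rests on an assumption: that Windows 10/11 stores these preferences as string values under `HKEY_CURRENT_USER\Software\Microsoft\DirectX\UserGpuPreferences`, with the executable path as the value name and `GpuPreference=2;` meaning high performance. This is commonly reported behavior rather than something stated in this article, so check it on your own system first. The function names are hypothetical.

```python
# Assumed registry location of per-app GPU preferences (verify on your Windows build).
PREFS_KEY = r"Software\Microsoft\DirectX\UserGpuPreferences"

# Assumed codes: 0 = let Windows decide, 1 = power saving, 2 = high performance.
PREFERENCE_CODES = {"default": 0, "power_saving": 1, "high_performance": 2}


def preference_value(mode):
    """Build the registry string for a preference mode."""
    if mode not in PREFERENCE_CODES:
        raise ValueError(f"unknown mode: {mode!r}")
    return f"GpuPreference={PREFERENCE_CODES[mode]};"


def set_gpu_preference(exe_path, mode="high_performance"):
    """Write the preference for one executable (Windows only)."""
    import winreg  # Windows-only standard-library module, imported lazily

    with winreg.CreateKey(winreg.HKEY_CURRENT_USER, PREFS_KEY) as key:
        winreg.SetValueEx(key, exe_path, 0, winreg.REG_SZ, preference_value(mode))
```

A call such as `set_gpu_preference(r"C:\Games\MyGame\game.exe")` (a placeholder path) should then show up in the Graphics Settings list as High performance. The settings page remains the safer route, since it does not depend on the registry layout staying the same.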

If this does not solve the problem, go to the next step.

5. Are You on Battery? (Applicable to Laptops)

If you are on a gaming laptop, one of the biggest reasons for your games not using the dedicated graphics card could be that the laptop is running on battery.

To save power, a laptop with a powerful dedicated graphics card may fall back to the integrated graphics, or throttle the dedicated GPU heavily, while it runs on battery.

On many gaming laptops, the dedicated graphics card only runs at full performance when the computer is connected to a power socket, so plug in the charger and test the game again.

6. Are You Using the Correct PCIe Slot for Your Graphics Card?

One fundamental reason your games may not be using the graphics card is that it is installed in the wrong slot. A typical graphics card requires an x16 slot to function correctly.

However, not all x16 slots are the same. A full x16 slot has 16 PCIe lanes, but some physically x16-sized slots are wired with only 4.

Installing a dedicated graphics card in a PCIe slot with only four lanes can reduce its performance or, in some cases, keep it from working properly.

For instance, your graphics card will only work with the motherboard above if installed in the first x16 slot.
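To get a feel for how much a four-lane slot costs you, the theoretical link bandwidth can be worked out from the per-lane transfer rate and the line encoding of each PCIe generation. The sketch below uses the published figures for PCIe 1.0 through 4.0; the function name is made up for this example, and real-world throughput is somewhat lower than these theoretical maximums.

```python
# Per-lane transfer rate in GT/s and encoding efficiency for each PCIe generation.
# Gen 1 and 2 use 8b/10b encoding, gen 3 and 4 use 128b/130b.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def link_bandwidth_gb_s(generation, lanes):
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # One transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return rate_gt_s * efficiency / 8 * lanes


full = link_bandwidth_gb_s(3, 16)    # card in a true x16 slot
reduced = link_bandwidth_gb_s(3, 4)  # same card in a slot wired for 4 lanes
print(f"x16: {full:.2f} GB/s, x4: {reduced:.2f} GB/s")
```

For PCIe 3.0 this works out to roughly 15.75 GB/s for 16 lanes against 3.94 GB/s for 4 lanes, which is a quarter of the bandwidth. How much that hurts depends on the game and the card.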


7. BIOS Settings

If all of the steps above fail to make a difference, the problem may be caused by the BIOS settings.

What happens is that the motherboard has the integrated graphics set as the first priority and PCI Express as the second. The priority setting decides which component will be used and which one will be on standby.

To enter the BIOS, follow these steps:

- Go to Update & Security in Windows Settings

- Click on Recovery

- Click on Restart now under Advanced startup

- Click on Troubleshoot and then Advanced options

- Click on UEFI Firmware Settings to enter the BIOS

Depending on your PC make and model, you can also enter BIOS at system startup by pressing DEL, F2, F1, or the ESC key.

To tweak the settings, follow these steps:

- Look for Peripherals or IO Devices (every motherboard has this under a different name)

- Once there, look for the Primary display option (the name may vary a little)

- When you find this, click on the menu, and select PCI Express as your primary display

- Save and exit the BIOS (press F10)

Note that not all motherboards expose this option in the BIOS.

After you have completed these steps, your BIOS will now have the PCIe slot as the primary display output. You can now boot up your system and test the games.

If the issue has not been resolved, some hardware-level problems are likely causing it.

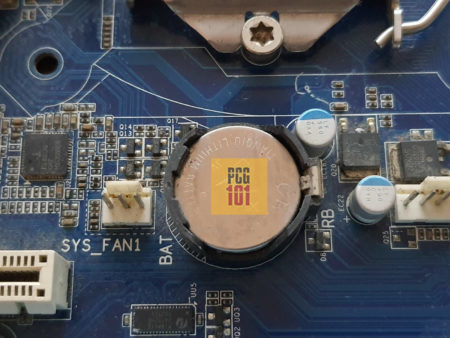

8. Reset BIOS By Removing CMOS Battery

You can try resetting the BIOS by removing the CMOS battery from the motherboard for 5 seconds.

This is relatively straightforward. Power off and unplug the PC, then locate the large coin-shaped CMOS battery on the motherboard.

To remove the battery, press on the lock/latch using a precision screwdriver.

Removing the CMOS battery will bring the BIOS back to its default configuration and should fix any underlying issues with wrong hardware settings.

Other Things to Try

It can get very frustrating to figure out why games are not using the GPU, especially if you have gone through all the troubleshooting steps above.

There are a few other procedures that you can adopt to ensure that the GPU is connected properly.

- Check the cables: Replacing the cable that connects the GPU to the monitor may solve the problem, especially if the cable is old.

- Check if the card is inserted correctly: You can check whether the GPU is fully seated in the PCIe slot. Sometimes, the gold contacts of the GPU are not entirely in contact with the PCIe connectors. A symptom of this issue is the GPU not showing up in Device Manager under Display adapters.

- Run the GPU in another slot: ATX motherboards often have multiple PCIe slots. If yours does, you can insert the card into another slot. If the issue persists, your GPU is most likely to blame.

FREQUENTLY ASKED QUESTIONS

1. How can you tell if a game is not using the GPU?

You can tell if a game is not using the GPU by checking the performance and resource usage of the game while it is running. If the CPU usage is high and the GPU usage is low, it is likely that the game is not using the GPU as much as it should be.
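If you have an NVIDIA card, one way to check this while the game is running is to poll `nvidia-smi`, the command-line tool that ships with the NVIDIA driver. The following is a minimal sketch, assuming `nvidia-smi` is on the PATH. The helper names and the 10% idle threshold are arbitrary choices for illustration, not values from this article.

```python
import subprocess

# Ask nvidia-smi for name, GPU utilization (%) and used memory (MiB) as bare CSV.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(csv_text):
    """Parse nvidia-smi CSV output into one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        name, utilization, memory = [field.strip() for field in line.split(",")]
        stats.append({
            "name": name,
            "utilization_pct": int(utilization),
            "memory_used_mib": int(memory),
        })
    return stats


def looks_idle(stats, threshold_pct=10):
    """True if every listed GPU is below the (arbitrary) utilization threshold."""
    return all(gpu["utilization_pct"] < threshold_pct for gpu in stats)


def read_gpu_stats():
    """Run nvidia-smi once and return the parsed stats."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)
```

Run `looks_idle(read_gpu_stats())` a few times with the game in the foreground. If it keeps returning `True` while the CPU is busy, the game is probably rendering on the integrated graphics. The Performance tab in Task Manager shows the same information without any scripting.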

2. What are the potential causes of a game not using the GPU?

There are several potential causes of a game not using the GPU, including outdated or malfunctioning drivers, incorrect or outdated settings, hardware limitations, or conflicts with other software or hardware components.

3. What are some common settings to adjust to ensure that a game is using the GPU?

Some common settings to adjust to ensure that a game is using the GPU include enabling hardware acceleration in the game settings, setting the correct graphics card as the default GPU, adjusting the power management settings to allow for maximum GPU performance, and updating the graphics drivers to the latest version.

4. Can outdated or malfunctioning drivers affect GPU usage in games?

Yes, outdated or malfunctioning drivers can affect GPU usage in games. It is important to regularly update drivers to ensure that the GPU is functioning properly and being used to its full potential.

5. Are there any tools or software programs that can help diagnose and fix GPU usage issues in games?

Yes, there are several tools and software programs that can help diagnose and fix GPU usage issues in games.

These include GPU-Z, MSI Afterburner, and NVIDIA Inspector, which can provide information on GPU usage and temperature, as well as allow users to adjust settings to optimize GPU performance.